local-ai-stack is a starter kit for building AI applications that run entirely on the local machine, starting with document Q&A. It is written in JavaScript and costs nothing to run. The stack consists of Ollama for inference, Supabase pgvector as the vector database, LangChain.js for LLM orchestration, Next.js for app logic, and Transformers.js with the all-MiniLM-L6-v2 model for generating embeddings.
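
The way these components fit together in a document Q&A flow can be illustrated with a dependency-free sketch: embed the documents, find the ones closest to the question, and build a prompt for the model. Everything here is a stand-in and not the kit's actual code. `makeToyEmbedder` is a hypothetical bag-of-words substitute for Transformers.js with all-MiniLM-L6-v2, the in-memory array stands in for Supabase pgvector, and the resulting prompt is what would be sent to a model served by Ollama.

```javascript
// Split text into lowercase word tokens.
const tokenize = (text) => text.toLowerCase().match(/[a-z0-9]+/g) || [];

// Hypothetical toy embedder: one vector dimension per word in the corpus.
// A real embedding model returns dense vectors that capture meaning instead.
function makeToyEmbedder(corpus) {
  const vocab = [...new Set(corpus.flatMap(tokenize))];
  const index = new Map(vocab.map((word, i) => [word, i]));
  return (text) => {
    const vec = new Array(vocab.length).fill(0);
    for (const word of tokenize(text)) {
      const i = index.get(word);
      if (i !== undefined) vec[i] += 1; // words outside the vocabulary are ignored
    }
    return vec;
  };
}

// Cosine similarity between two equal-length vectors (0 if either is all zeros).
function cosineSimilarity(a, b) {
  let dot = 0, normA = 0, normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return normA === 0 || normB === 0 ? 0 : dot / Math.sqrt(normA * normB);
}

// In-memory stand-in for a vector table: one row per document.
function buildStore(docs, embed) {
  return docs.map((text) => ({ text, embedding: embed(text) }));
}

// Return the k rows most similar to the question, best match first.
function retrieve(store, question, embed, k = 2) {
  const queryVec = embed(question);
  return store
    .map((row) => ({ text: row.text, score: cosineSimilarity(queryVec, row.embedding) }))
    .sort((a, b) => b.score - a.score)
    .slice(0, k);
}

// Assemble the prompt that would be passed to the language model.
function buildPrompt(question, hits) {
  const context = hits.map((hit, i) => `[${i + 1}] ${hit.text}`).join("\n");
  return `Answer using only the context below.\n\nContext:\n${context}\n\nQuestion: ${question}`;
}
```

Calling `retrieve(buildStore(docs, embed), question, embed)` followed by `buildPrompt(question, hits)` yields a prompt grounded in the best-matching documents. In the real stack, the similarity search would presumably run inside the database rather than in application code.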

To get started, fork and clone the repository, install the dependencies, set up Ollama and Supabase locally, and configure the required secrets. The kit includes scripts that generate embeddings from document files and store them in Supabase. Once set up, the application runs locally for testing and further development, and the kit also documents how to deploy it with cloud-based services if desired.
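
As a rough illustration of what an embedding-generation script does, the sketch below splits a document into overlapping chunks and pairs each chunk with its embedding, producing rows ready to insert into a vector table. It is a sketch under assumptions, not the kit's script: the chunking strategy, the `splitIntoChunks` and `buildRows` helpers, and the `content`, `metadata`, and `embedding` field names are all hypothetical, and the `embed` function is injected so that any embedding model could be plugged in.

```javascript
// Split text into fixed-size character chunks that overlap by `overlap` characters,
// so that sentences cut at a boundary still appear whole in a neighboring chunk.
function splitIntoChunks(text, chunkSize = 200, overlap = 50) {
  if (overlap >= chunkSize) {
    throw new Error("overlap must be smaller than chunkSize");
  }
  const chunks = [];
  const step = chunkSize - overlap;
  for (let start = 0; start < text.length; start += step) {
    chunks.push(text.slice(start, start + chunkSize));
    if (start + chunkSize >= text.length) break; // last chunk reached the end
  }
  return chunks;
}

// Build one row per chunk. `embed` is any (possibly async) function that maps
// a string to a numeric vector; the row shape is a hypothetical example.
async function buildRows(source, text, embed, options = {}) {
  const chunks = splitIntoChunks(text, options.chunkSize, options.overlap);
  const rows = [];
  for (const [i, content] of chunks.entries()) {
    rows.push({
      content,
      metadata: { source, chunk: i },
      embedding: await embed(content),
    });
  }
  return rows;
}
```

The overlap is a common design choice rather than a requirement: it trades some duplicated storage for a lower chance that a relevant passage is split across two chunks and missed at query time.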

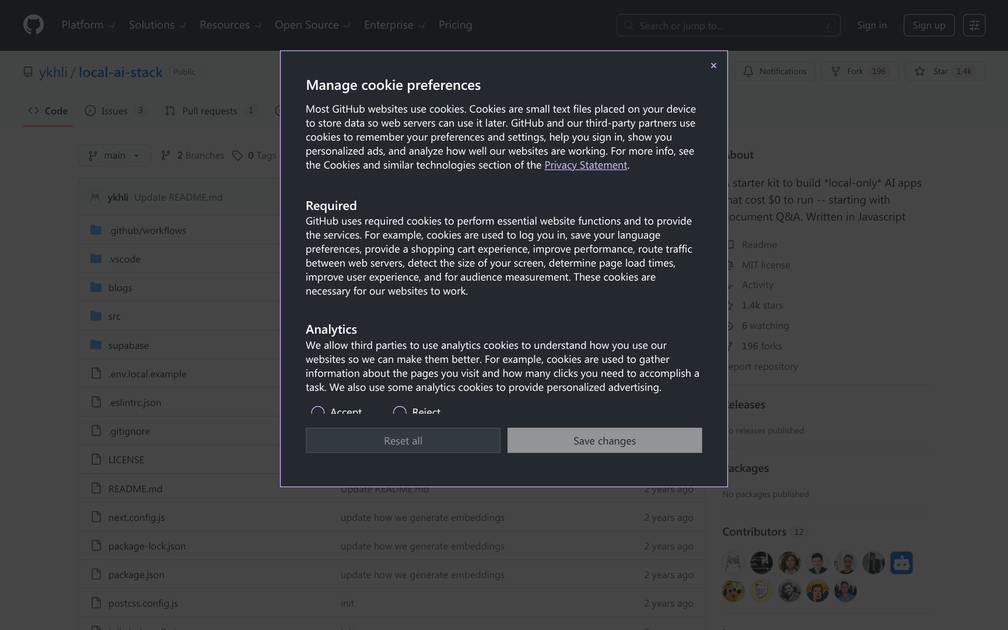

The project is open source under the MIT license and actively maintained on GitHub, where it has more than 1.3k stars and 190 forks. The repository includes setup instructions and resources to help users get up and running quickly.
