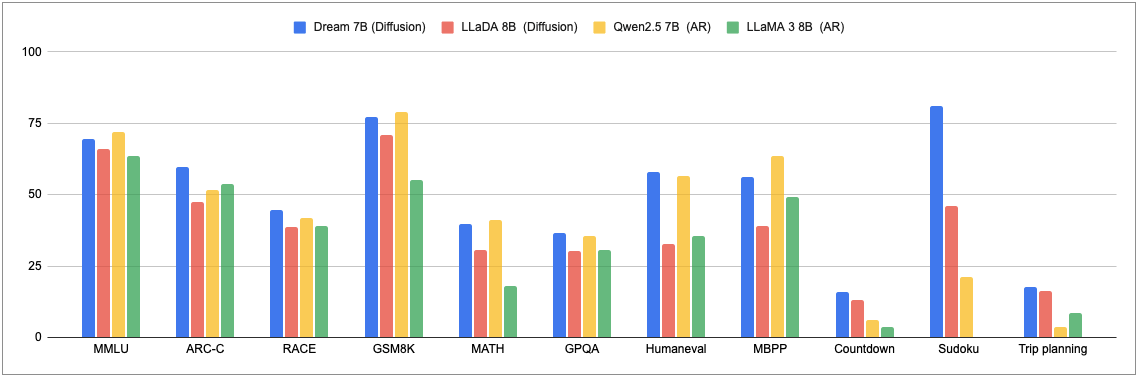

Dream 7B is an open diffusion large language model (LLM) developed jointly by Huawei Noah’s Ark Lab and the University of Hong Kong. It consistently outperforms existing diffusion language models and matches or exceeds autoregressive (AR) models of similar size, such as LLaMA3 and Qwen2.5, on general-purpose tasks, mathematical reasoning, and coding. It is also strong at planning, and its diffusion design allows more flexible inference than AR decoding.

Unlike AR models, which generate text one token at a time from left to right, Dream 7B uses discrete diffusion modeling: it starts from a fully noised sequence and refines all positions in parallel over several steps. Because every position can draw on context from both sides, the model captures bidirectional context, which improves global coherence, and the iterative refinement makes generation easier to control. Diffusion modeling also has the potential for faster sampling and more efficient training objectives, which makes Dream 7B a credible alternative to AR frameworks.
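The refinement loop described above can be illustrated with a toy sketch. This is not Dream 7B's decoder: `toy_denoiser` is a hypothetical stand-in that guesses tokens at random, and committing the most confident guesses first is only one common unmasking strategy, assumed here for illustration.

```python
import random

MASK = "<mask>"

def toy_denoiser(seq, vocab, rng):
    """Hypothetical stand-in for a trained model.

    For every masked position it returns a (token, confidence) guess.
    A real model would condition on the full bidirectional context in
    `seq`; this stub guesses at random.
    """
    return {
        i: (rng.choice(vocab), rng.random())
        for i, tok in enumerate(seq)
        if tok == MASK
    }

def diffusion_decode(length, steps, vocab, seed=0):
    """Start from a fully masked sequence and unmask it over `steps` passes."""
    rng = random.Random(seed)
    seq = [MASK] * length
    for step in range(steps):
        guesses = toy_denoiser(seq, vocab, rng)
        if not guesses:
            break  # nothing left to fill in
        # Spread the remaining masked positions evenly over the remaining steps.
        remaining_steps = steps - step
        k = -(-len(guesses) // remaining_steps)  # ceiling division
        # Commit the k most confident guesses; the rest stay masked for now.
        most_confident = sorted(
            guesses, key=lambda i: guesses[i][1], reverse=True
        )[:k]
        for i in most_confident:
            seq[i] = guesses[i][0]
    return seq

if __name__ == "__main__":
    print(diffusion_decode(length=8, steps=4, vocab=["a", "b", "c"]))
```

Note that positions are filled in order of confidence rather than left to right, which is what makes arbitrary-order generation possible.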

The model is trained with a masked diffusion objective on 580 billion tokens drawn from Dolma v1.7, OpenCoder, and DCLM-Baseline. Pretraining ran on 96 NVIDIA H800 GPUs for 256 hours (24,576 GPU-hours), with weights initialized from AR models such as Qwen2.5 to accelerate learning. Dream 7B also introduces a context-adaptive, token-level noise rescheduling mechanism, which adjusts the noise level of each token according to its context so that training is more effective and token predictions are more precise.
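For readers unfamiliar with masked diffusion training, the sketch below shows a common formulation of the objective: mask each token independently with probability `t`, then score the model only on the masked positions, weighted by `1/t`. It is an assumption that this resembles Dream 7B's base objective; the context-adaptive noise rescheduling is not reproduced here, and `prob_of_true_token` is a hypothetical callback standing in for a real model.

```python
import math
import random

MASK = "<mask>"

def mask_tokens(tokens, t, rng):
    """Forward (noising) process: mask each token independently with probability t."""
    return [MASK if rng.random() < t else tok for tok in tokens]

def masked_diffusion_loss(tokens, noised, t, prob_of_true_token):
    """Cross-entropy on masked positions only, weighted by 1/t.

    `prob_of_true_token(i, noised)` is a hypothetical callback returning the
    probability the model assigns to the original token at position i.
    Requires t > 0.
    """
    total = 0.0
    for i, noisy in enumerate(noised):
        if noisy == MASK:
            total += -math.log(prob_of_true_token(i, noised))
    # Normalize by sequence length and reweight by the noise level.
    return total / (t * len(tokens))

if __name__ == "__main__":
    rng = random.Random(0)
    tokens = "the cat sat on the mat".split()
    t = 0.5
    noised = mask_tokens(tokens, t, rng)
    # Pretend the model assigns probability 0.25 to every true token.
    loss = masked_diffusion_loss(tokens, noised, t, lambda i, seq: 0.25)
    print(noised, loss)
```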

Dream 7B is particularly strong at planning: it outperforms similar-sized models on tasks such as Countdown and Sudoku, and on these tasks it can rival much larger models such as DeepSeek V3. Its diffusion architecture also allows flexible inference, including arbitrary-order generation, infilling, and completion. Users can adjust decoding parameters to trade quality against speed, a control that traditional AR decoding does not offer.
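As a rough illustration of infilling and the quality-speed trade-off, the sketch below builds an infilling template and computes how many tokens would be committed per refinement step for a given step budget. The helper names are hypothetical, and treating the number of steps as the main speed control is an assumption rather than a description of Dream 7B's actual decoding parameters.

```python
MASK = "<mask>"

def infill_template(prefix, suffix, gap):
    """Fix the known tokens on both sides and leave `gap` masked slots between them."""
    return prefix + [MASK] * gap + suffix

def unmask_schedule(num_masked, steps):
    """Number of tokens committed at each step, spread as evenly as possible.

    Fewer steps means fewer model forward passes (faster), but more tokens
    are committed per pass, which typically costs some quality.
    """
    steps = max(1, min(steps, num_masked))
    base, extra = divmod(num_masked, steps)
    return [base + (1 if s < extra else 0) for s in range(steps)]

if __name__ == "__main__":
    template = infill_template(["The", "cat"], ["mat", "."], gap=10)
    masked = template.count(MASK)
    for steps in (10, 4, 1):
        schedule = unmask_schedule(masked, steps)
        print(f"{steps:>2} steps -> {len(schedule)} forward passes, per-step tokens {schedule}")
```

By comparison, an AR model would need one forward pass per generated token and could not condition on the fixed suffix while filling the gap.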

To align the model with user instructions, Dream 7B was fine-tuned with supervision on a dataset of 1.8 million pairs, reaching performance comparable to leading AR models. The diffusion framework also opens the door to more advanced post-training techniques that could extend its usefulness across applications.

Dream 7B sets a new bar for diffusion language models by combining efficiency, scalability, and flexibility. Its architecture addresses key limitations of AR models, which makes it a promising option for applications that require sustained reasoning, long-term planning, and contextual understanding.
